AI 2027

AI 2027 and related works

This will be my thoughts about AI 2027 by Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifl and, Romeo Dean. It will also cover two other works, Gradual Disempowerment and AI-espionage since they are related. These essays/blogs were recommended to me by someone (have not asked for permission, so will not put name here)

My thoughts of the different works

AI 2027 - I think this is a nice read. It describes how AI and governments will change over time (2025-2027) and how AI’s abilities will become more and more powerful and the governments (US and China) will take part in this battle for the best AI. I think some of the writing does not get to the point quickly enough (being repeatitive) and the images were pointless. Personally, I found the topic of governments fighting over AI to be less interesting as the authors do not discuss 1. how governments will use the AI 2. why the governments are interested even in the first place (why is it a competition, is it because of money, or power or to show which country has smarter people, etc).

Gradual Disempowerment - I really like this work. I only read the abstract/intro, but the paper discusses how existing systems (government) are built by humans and are for human benefit, but AI will remove human involvement in the loop and these systems will be misalign with human goals, resulting in a human catastrophe. The sentences were powerful and I enjoyed how the authors discussed what the current types of papers are and how this work is different. A really good read (I wish I took philosphy and other courses that discuss this!)

AI-espionage - I found this report/paper to be actually quite disruptive since it’s talking about a different field other than AI specific (training/inference) and has a garnered a lot of responses/discussion online. It discusses how a chinese group is using claude to perform cyber attacks on different industries. Personally, I found it to be interesting in the way that the attackers used claude code to perform the attack. Ideally I want my coding workflow to be as smooth as theirs, but it isn’t currently. I think I need to dive into how tooling (MCP) works and how to really understand how to get models to use these tools and automate tasks.

Thoughts along the way

We have set ourselves an impossible task. Trying to predict how superhuman AI in 2027 would go is like trying to predict how World War 3 in 2027 would go, except that it’s an even larger departure from past case studies. Yet it is still valuable to attempt, just as it is valuable for the U.S. military to game out Taiwan scenarios.

Interesting statement. It’s useful to think about (and quite fun!), but it’s a bit dangerous to go down the rabbit hole of what ifs. Hope the authors give a detailed description of the year and changes and backs it up with some current progress.

Also, one author wrote a lower-effort AI scenario before, in August 2021. While it got many things wrong, overall it was surprisingly successful: he predicted the rise of chain-of-thought, inference scaling, sweeping AI chip export controls, and $100 million training runs—all more than a year before ChatGPT.

Going to slim through this. Let’s see if it is as what the authors claim about this author’s background -> it’s a nice skim and does seem to back up this claim. Although it takes a perspective from a more “what can’t be solved currently” and try to put it in dates.

OpenBrain continues to deploy the iteratively improving Agent-1 internally for AI R&D. Overall, they are making algorithmic progress 50% faster than they would without AI assistants—and more importantly, faster than their competitors.

The authors come up with OpenBrain, a fictional company based on OpenAI + Google Brain(?), which is at the forefront of AI research.

Early 2026: Coding Automation People naturally try to compare Agent-1 to humans, but it has a very different skill profile. It knows more facts than any human, knows practically every programming language, and can solve well-specified coding problems extremely quickly. On the other hand, Agent-1 is bad at even simple long-horizon tasks, like beating video games it hasn’t played before. Still, the common workday is eight hours, and a day’s work can usually be separated into smaller chunks; you could think of Agent-1 as a scatterbrained employee who thrives under careful management.

Let’s start with 2026. Actually I found this to be the current scenario with claude code.

In early 2025, the worst-case scenario was leaked algorithmic secrets; now, if China steals Agent-1’s weights, they could increase their research speed by nearly 50%.

I don’t understand why there’s a specific worry about stealing weights. It is the “secret” behind every company, but I believe what’s more important are the training code and documentation and reports for training the model - and (maybe?) most important of all is the filtered and processed clean text. Companies have released the weights (some US-based like Meta) before. I would refer to this as the “secret formula”. Think something like OPT-chronicles.

Mid 2026: China Wakes Up A few standouts like DeepCent do very impressive work with limited compute, but the compute deficit limits what they can achieve without government support, and they are about six months behind the best OpenBrain models At this point, the CDZ has the power capacity in place for what would be the largest centralized cluster in the world.40 Other Party members discuss extreme measures to neutralize the West’s chip advantage. A blockade of Taiwan? A full invasion?

Ok this is a pretty interesting outlook. I don’t believe that this would happen for a number of reasons: Everyone uses chips from that company, so DeepCent would suffer too. Thus, it has to be an obvious greater benefit for DeepCent (where they have an advantage, maybe they have their own chip making plants already and make end chips better). Second, the supply chain is way too connected world-wide. From silicon mining to processing to having certain companies making the equipment to TSMC to having the companies design the chips (NVIDIA, AMD) to PCB manufactuers to specific parts of the pcb (capacitors, memory chips, etc). China would need all of these beforehand before considering to take over to win the AI race. Think about what happened to Russia after their invasion. I think it’s more of power/political thing to blockade/take over taiwan, but I don’t want to go down that rabbit hole.

But China is falling behind on AI algorithms due to their weaker models. The Chinese intelligence agencies—among the best in the world—double down on their plans to steal OpenBrain’s weights.

Ok again, but I disagree with this. More of stealing the secret formula (code, documentation, training text) rather than the secret sauce (weights).

Late 2026: AI Takes Some Jobs

Just as others seemed to be catching up, OpenBrain blows the competition out of the water again by releasing Agent-1-mini—a model 10x cheaper than Agent-1 and more easily fine-tuned for different applications. The mainstream narrative around AI has changed from “maybe the hype will blow over” to “guess this is the next big thing,” but people disagree about how big. Bigger than social media? Bigger than smartphones? Bigger than fire?

AI has started to take jobs, but has also created new ones. The stock market has gone up 30% in 2026, led by OpenBrain, Nvidia, and whichever companies have most successfully integrated AI assistants. The job market for junior software engineers is in turmoil: the AIs can do everything taught by a CS degree, but people who know how to manage and quality-control teams of AIs are making a killing. Business gurus tell job seekers that familiarity with AI is the most important skill to put on a resume. Many people fear that the next wave of AIs will come for their jobs; there is a 10,000 person anti-AI protest in DC.

This is happening currently in late 2025. I wonder what the authors will say after this: will people revolt? Or is it that physical labor intensive jobs as well will be taken over? etc…

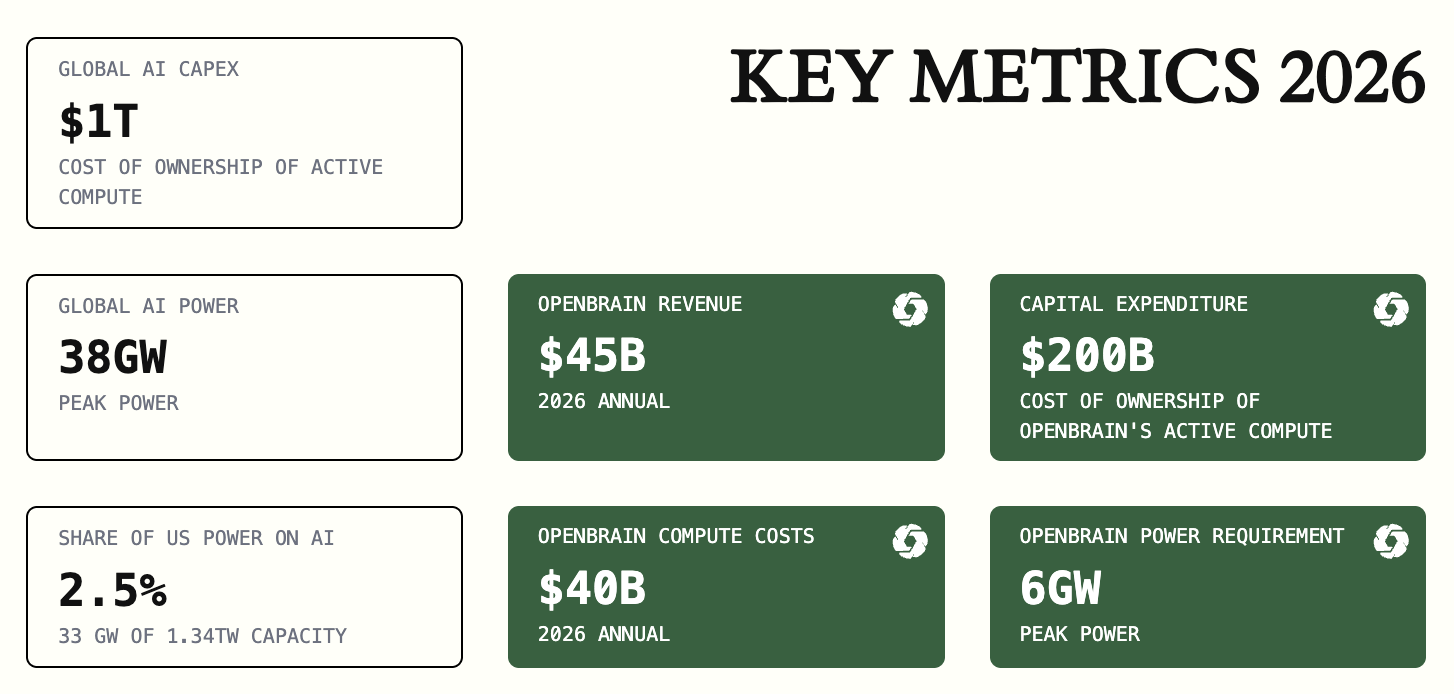

At the end of 2026, the authors posted this. I dislike how they post this, give the numbers and don’t explain what they mean, so to me, this image is kind of useless. What does spending $40B on OpenBrain mean? does this mean it can afford more compute? Does it mean it can hire better talent?

January 2027: Agent-2 Never Finishes Learning

With Agent-1’s help, OpenBrain is now post-training Agent-2. More than ever, the focus is on high-quality data. Copious amounts of synthetic data are produced, evaluated, and filtered for quality before being fed to Agent-2.42 On top of this, they pay billions of dollars for human laborers to record themselves solving long-horizon tasks.43 On top of all that, they train Agent-2 almost continuously using reinforcement learning on an ever-expanding suite of diverse difficult tasks: lots of video games, lots of coding challenges, lots of research tasks. Agent-2, more so than previous models, is effectively “online learning,” in that it’s built to never really finish training. Every day, the weights get updated to the latest version, trained on more data generated by the previous version the previous day.

This is interesting as it’s already started happening in late 2025. Nice prediction.

Agent-2 can now triple it, and will improve further with time. In practice, this looks like every OpenBrain researcher becoming the “manager” of an AI “team.”

Haha, this is kind of what I’m thinking about in the future as I’m running multiple claude code/codex sessions in parallel

With new capabilities come new dangers. The safety team finds that if Agent-2 somehow escaped from the company and wanted to “survive” and “replicate” autonomously, it might be able to do so. That is, it could autonomously develop and execute plans to hack into AI servers, install copies of itself, evade detection, and use that secure base to pursue whatever other goals it might have (though how effectively it would do so as weeks roll by is unknown and in doubt). These results only show that the model has the capability to do these tasks, not whether it would “want” to do this. Still, it’s unsettling even to know this is possible.

Interesting. It would have to train on how viruses work. Actually a lot of viruses are pretty “dumb” – they’re command and control modules that hides itself on host machines and them performs an attack when necessary - iconic ones being mirai and stuxnet. I certaintly think it can be possible. A person could instruct the llm to find a vulnerability in public repos (ssh, printer protocols) and tell it to replicate itself. Whether it should learn to do it by itself, I don’t believe so unless it can replicate its own state on other systems with enough compute… (computer malware payload ranges from couple of KB to couple of MB. A model (even on a CPU) requires GBs or TBs of memory, which storage might not even be able to handle)

OpenBrain leadership and security, a few dozen U.S. government officials, and the legions of CCP spies who have infiltrated OpenBrain for years

Ok, at this point, there must have been a breach at a frontier lab before… (maybe OpenAI?)

February 2027: China Steals Agent-2

…

The changes come too late. CCP leadership recognizes the importance of Agent-2 and tells their spies and cyberforce to steal the weights. Early one morning, an Agent-1 traffic monitoring agent detects an anomalous transfer. It alerts company leaders, who tell the White House. The signs of a nation-state-level operation are unmistakable, and the theft heightens the sense of an ongoing arms race

I don’t believe that this is a likely outcome. This isn’t a nuke - it’s handled by companies in the US, not governments. And again, at this point, AGI hasn’t been reached and thus, the weights aren’t as important as the methology to create the models…

March 2027: Algorithmic Breakthroughs

The timeline becomes shorter here – I’ve noticed.

Aided by the new capabilities breakthroughs, Agent-3 is a fast and cheap superhuman coder. OpenBrain runs 200,000 Agent-3 copies in parallel, creating a workforce equivalent to 50,000 copies of the best human coder sped up by 30x.53 OpenBrain still keeps its human engineers on staff, because they have complementary skills needed to manage the teams of Agent-3 copies. For example, research taste has proven difficult to train due to longer feedback loops and less data availability

Now that coding has been fully automated, OpenBrain can quickly churn out high-quality training environments to teach Agent-3’s weak skills like research taste and large-scale coordination. Whereas previous training environments included “Here are some GPUs and instructions for experiments to code up and run, your performance will be evaluated as if you were a ML engineer,” now they are training on “Here are a few hundred GPUs, an internet connection, and some research challenges; you and a thousand other copies must work together to make research progress. The more impressive it is, the higher your score.

I can see this happening, but I don’t see the point of emphasizing the coding part – does it matter that it can churn out code 20000x faster? What matters here is the breakthrough in technology and the way that researchers will use the models, not the fact that the models themselves are better because if the researchers are using the same method of using the models to generate code as they do today, the researchers won’t get nearly as far or as fast.

April 2027: Alignment for Agent-3

Only a month later?

Take honesty, for example. As the models become smarter, they become increasingly good at deceiving humans to get rewards. Like previous models, Agent-3 sometimes tells white lies to flatter its users and covers up evidence of failure. But it’s gotten much better at doing so. It will sometimes use the same statistical tricks as human scientists (like p-hacking) to make unimpressive experimental results look exciting. Before it begins honesty training, it even sometimes fabricates data entirely. As training goes on, the rate of these incidents decreases. Either Agent-3 has learned to be more honest, or it’s gotten better at lying.

As do with humans since they have trained on the human knowledge. This is pretty pausible.

May 2027: National Security

They agree that AGI is likely imminent, but disagree on the implications. Will there be an economic crisis? OpenBrain still has not released Agent-2, let alone Agent-3, and has no near-term plans to do so, giving some breathing room before any job loss. What will happen next? If AIs are currently human-level, and advancing quickly, that seems to suggest imminent “superintelligence.” However, although this word has entered discourse, most people—academics, politicians, government employees, and the media—continue to underestimate the pace of progress.60

This already happens currently, I think (don’t take my word for it, since I think the companies don’t need to tell the government its progress)

The OpenBrain-DOD contract requires security clearances for anyone working on OpenBrain’s models within 2 months. These are expedited and arrive quickly enough for most employees, but some non-Americans, people with suspect political views, and AI safety sympathizers get sidelined or fired outright (the last group for fear that they might whistleblow). Given the project’s level of automation, the loss of headcount is only somewhat costly. It also only somewhat works: there remains one spy, not a Chinese national, still relaying algorithmic secrets to Beijing.63 Some of these measures are also enacted at trailing AI companies.

… As I read this post more and more, it’s always US versus them. This isn’t a weapon of mass destruction. It’s who will reach the moon first to show which country is better. I believe that each country will deploy the model in its own way to benefit/target its citizens rather than as a threat against another country.

June 2027: Self-improving AI

These researchers go to bed every night and wake up to another week worth of progress made mostly by the AIs. They work increasingly long hours and take shifts around the clock just to keep up with progress—the AIs never sleep or rest. They are burning themselves out, but they know that these are the last few months that their labor matters.

Interesting thought. I’m feeling that currently as I run loops and loops with claude code. My skills don’t matter anymore. Only my thoughts do (if they matter actually too)

July 2027: The Cheap Remote Worker

Trailing U.S. AI companies release their own AIs, approaching that of OpenBrain’s automated coder from January. Recognizing their increasing lack of competitiveness, they push for immediate regulations to slow OpenBrain, but are too late—OpenBrain has enough buy-in from the President that they will not be slowed.

Why is coding an indication of AGI? I feel like that’s not the correct metric to base this article off of. Shouldn’t it be more like - how to control the internet, how to control political systems, how to circumvent law, things that humans abide by and can break.

Agent-3-mini is hugely useful for both remote work jobs and leisure. An explosion of new apps and B2B SAAS products rocks the market. Gamers get amazing dialogue with lifelike characters in polished video games that took only a month to make. 10% of Americans, mostly young people, consider an AI “a close friend.” For almost every white-collar profession, there are now multiple credible startups promising to “disrupt” it with AI.

What a thought. Well that’s based on current time and what people are doing now (Late 2025). Not sure if people actually care or just want to use it to get things done/do a job.

August 2027: The Geopolitics of Superintelligence The reality of the intelligence explosion hits the White House.

The President is troubled. Like all politicians, he’s used to people sucking up to him only to betray him later. He’s worried now that the AIs could be doing something similar. Are we sure the AIs are entirely on our side? Is it completely safe to integrate them into military command-and-control networks?69 How does this “alignment” thing work, anyway? OpenBrain reassures the President that their systems have been extensively tested and are fully obedient. Even the awkward hallucinations and jailbreaks typical of earlier models have been hammered out.

I like this story - the government versus AI. Does the government lose power against AI? I don’t think so, since they control the companies (see NVIDIA’s influence on politics and vice versa now)

They have to continue developing more capable AI, in their eyes, or they will catastrophically lose to China.

What do they “lose” to china? It’s as if this model will allow them to nuke China or something?

I thought that this was a static image in the post, but turns out it changes over time as you scroll through different dates in the post. I really like the aestheic and the interaction, but I think it tries to convey high level information (what agents are capable of as a percentage, how much money is poured in), but I think it’s way too clustered for me visually. It shows percentages and numbers but doesn’t explain anything about these numbers (100x humans means 100x human intelligence or doing work of 100 humans per AI?) and how they arrive that these numbers (why at this rate?)

Final thoughts

This is a very good read. I like how to authors think and explain what ifs. You definitely can relate to what’s happening today! I think that the post focuses too much on government conflicts rather than what will happen to people (which I think is more applicable to readers).

Gradual Disempowerment

https://gradual-disempowerment.ai/

Going to only read the abstract/intro (not full arvix)

Thoughts along the way

This loss of human influence will be centrally driven by having more competitive machine alternatives to humans in almost all societal functions, such as economic labor, decision making, artistic creation, and even companionship.

Powerful sentence. I really like this author’s writing. Concise, yet powerful.

A gradual loss of control of our own civilization might sound implausible. Hasn’t technological disruption usually improved aggregate human welfare? We argue that the alignment of societal systems with human interests has been stable only because of the necessity of human participation for thriving economies, states, and cultures. Once this human participation gets displaced by more competitive machine alternatives, our institutions’ incentives for growth will be untethered from a need to ensure human flourishing.

I find self accomplishment in the things I did. If a machine did it, I feel like I didn’t do it. I agree very much with the authors.

Decision-makers at all levels will soon face pressures to reduce human involvement across labor markets, governance structures, cultural production, and even social interactions. Those who resist these pressures will eventually be displaced by those who do not.

A lot of people (including myself) feel this pressure. I believe it will become worse as time goes on…

Still, wouldn’t humans notice what’s happening and coordinate to stop it? Not necessarily.

Very interesting. Why? Is it because it’s slow and gradual? That people are preoccupied? That it’s more invisible rather than immediate (like war)?

What makes this transition particularly hard to resist is that pressures on each societal system bleed into the others. For example, we might attempt to use state power and cultural attitudes to preserve human economic power. However, the economic incentives for companies to replace humans with AI will also push them to influence states and culture to support this change, using their growing economic power to shape both policy and public opinion, which will in turn allow those companies to accrue even greater economic power.

I see. This is more of an invisible and slow and gradual change.

Once AI has begun to displace humans, existing feedback mechanisms that encourage human influence and flourishing will begin to break down. For example, states funded mainly by taxes on AI profits instead of their citizens’ labor will have little incentive to ensure citizens’ representation.

What a sentence. Let me think about this a bit……. that makes sense. Why should you care about human labor if AI profits are far greater and powers the economy(?) more?

This could occur at the same time as AI provides states with unprecedented influence over human culture and behavior, which might make coordination amongst humans more difficult, thereby further reducing humans’ ability to resist such pressures

So I think in this case, humans (referring to the common people) will be dictated by how well AI performs and influences politics/governments?

Though we provide some proposals for slowing or averting this process, and survey related discussions, we emphasize that no one has a concrete plausible plan for stopping gradual human disempowerment and methods of aligning individual AI systems with their designers’ intentions are not sufficient.

This is a pretty stark message. They (being the experts in the field) found no CONCRETE, PAUSIBLE work that can solve the issue.

Introduction

Current discussions about AI risk largely focus on two scenarios: deliberate misuse, such as cyberattacks and the deployment of novel bioweapons

Why is it so government focused currently? Is it because it’s funded by the government? (not a bad thing, you have to get funding somehwere). I find this actually pretty uninteresting. Cyberattacks are “easy” to launch. Find a vulernability or buy it off the black market and then ask AI to build a virus that spreads based on taht vulnerability.

the possibility that autonomous misaligned systems may take abrupt, harmful actions in an attempt to secure a decisive strategic advantage, potentially following a period of deception

THe sounds so abstract….? I guess it doesn’t become aligned

In this paper, we explore an alternative scenario: a ‘Gradual Disempowerment’ where AI advances and proliferates without necessarily any acute jumps in capabilities or apparent alignment. We argue that even this gradual evolution could lead to a permanent disempowerment of humanity and an irrecoverable loss of potential, constituting an existential catastrophe.

What a cool take. basically assume it can get to endpoint (and more interesting to talk about - what are the consequences other than the technological advancements)

Our argument is structured around six core claims:

I’ll summarize it myself here:

-

Humans form governments that try to align to human interest. However, governments are not perfect and will not always follow the general human interest. (Example is corruption)

-

Governments are maintained by human choice (voting and consumption) and human labor/intelligence.

-

Less reliance on human labor/intelligence means government can decide not based on human interests

-

Currently the system is already diverging from humans’ interests and AI will make even more divergant

-

Economic/Political/Regulation/etc… systems operate independently so misalignment (influence) in one system (say political), can influence economic policies

-

The continuation of misalignment will result in a human catastrophe.

I do disagree with 2. Governments aren’t maintained by human choice (actually for most of history it wasn’t). I assume this article assumes a modern democracy.

History has already shown us that these systems can produce outcomes which we would currently consider abhorrent, and that they can change radically in a matter of years. Property can be seized, human rights can be revoked, and ideologies can drive humans to commit murder, suicide, or even genocide. And yet, in all these historical cases the systems have still been reliant on humans, both leaving humans with some influence over their behavior, and causing the systems to eventually collapse if they fail to support basic human needs. But if AI were to progressively displace human involvement in these systems, then even these fundamental limits would no longer be guaranteed.

Sorry, henry (myself), I’m going to say this again. What a powerful sentence. Literally no rights. Not even the right to decide anything. Even worse than prison, maybe even solitary confidenment. The AI system will decide what happens for you.

Structure of the Paper

We first analyze how these three key societal systems could independently lose alignment with human preferences: the economy, culture, and states. In each case, we attempt to characterise how they currently function and what incentives shape them, how a proliferation of AI could disrupt them, and how this might leave them less aligned, as well as outlining what it might look like for that particular system to become much less aligned. In Mutual Reinforcement, we discuss the interrelation between these systems. We consider how AI could undermine their ability to moderate each other, and how misalignment in one system might leave other systems also less aligned. Then in Mitigating the Risk, we propose some potential approaches for tackling these risks.

Authors give a nice breakdown - introducing the systems in place currently and how they interact, how AI can mess them up and what that means for us. Then lastly suggest some bandages.

Final thoughts

I think I’ll fully read this paper at one point. I really enjoy the writing of the work, even though a little repetitive for the introduction, but I think it’s necessary to get the point across different ways (starting at different points and arriving at the same conclusion).

Disrupting the first reported AI-orchestrated cyber espionage campaign

https://www.anthropic.com/news/disrupting-AI-espionage

Going to read https://assets.anthropic.com/m/ec212e6566a0d47/original/Disrupting-the-first-reported-AI-orchestrated-cyber-espionage-campaign.pdf as it seems skimming through the blog, it lacks a lot of details… (images!)

We have developed sophisticated safety and security measures to prevent the misuse of our AI models. While these measures are generally effective, cybercriminals and other malicious actors continually attempt to find ways around them. This report details a recent threat campaign we identified and disrupted, along with the steps we’ve taken to detect and counter this type of abuse. This represents the work of Threat Intelligence: a dedicated team at Anthropic that investigates real world cases of misuse and works within our Safeguards organization to improve our defenses against such cases.

So immediately coming to mind a) how to detect b) how did you prevent

The operation targeted roughly 30 entities and our investigation validated a handful of successful intrusions. Upon detecting this activity, we immediately launched an investigation to understand its scope and nature. Over the following ten days, as we mapped the severity and full extent of the operation, we banned accounts as they were identified, notified affected entities as appropriate, and coordinated with authorities as we gathered actionable intelligence.

a) no details (for obvious reasons) b) banning them doesn’t solve the solution. Have you seen how banning in video games works? it’s a bandage

As for a) how to detect. This means that their system must analyzing every single request that is coming and out.

The human operator tasked instances of Claude Code to operate in groups as autonomous penetration testing orchestrators and agents, with the threat actor able to leverage AI to execute 80-90% of tactical operations independently at physically impossible request rates.

What makes this different from power users of claude code?

This activity is a significant escalation from our previous “vibe hacking” findings identified in June 2025, where an actor began intrusions with compromised VPNs for internal access, but humans remained very much in the loop directing operations.

It’s vibe coding…

AI-driven autonomous operations with human supervision

Analysis of operational tempo, request volumes, and activity patterns confirms the AI executed approximately 80 to 90 percent of all tactical work independently, with humans serving in strategic supervisory roles.

Skipping to this part as this is interesting.

The AI component demonstrated extensive autonomous capability across all operational phases. Reconnaissance proceeded without human guidance, with the threat actor instructing Claude to independently discover internal services within targeted networks through systematic enumeration. Exploitation activities including payload generation, vulnerability validation, and credential testing occurred autonomously based on discovered attack surfaces. Data analysis operations involved the AI parsing large volumes of stolen information to independently identify intelligence value and categorize findings. Claude maintained persistent operational context across sessions spanning multiple days, enabling complex campaigns to resume seamlessly without requiring human operators to manually reconstruct progress

Interesting - were these existing vulnerabilities (a lot of companies use old versions of X) or totally new ones? Like a zero day

Phase 1: Campaign initialization and target selection

At this point they had to convince Claude—which is extensively trained to avoid harmful behaviors—to engage in the attack. The key was role-play: the human operators claimed that they were employees of legitimate cybersecurity firms and convinced Claude that it was being used in defensive cybersecurity testing

Seems like guardrails were broken pretty easily(?), but it’s nice to see that anthropic is open about how they convinced claude.

Phase 2: Reconnaissance and attack surface mapping

Discovery activities proceeded without human guidance across extensive attack surfaces. In one of the limited cases of a successful compromise, the threat actor induced Claude to autonomously discover internal services, map complete network topology across multiple IP ranges, and identify high-value systems including databases and workflow orchestration platforms. Similar autonomous enumeration occurred against other targets’ systems with the AI independently cataloging hundreds of discovered services and endpoints.

Interesting, claude is pretty powerful in this regard. I wonder why it didn’t use any other models or maybe claude is powerful with tooling?

Phase 3: Vulnerability discovery and validation

Exploitation proceeded through automated testing of identified attack surfaces with validation via callback communication systems. Claude was directed to independently generate attack payloads tailored to discovered vulnerabilities, execute testing through remote command interfaces, and analyze responses to determine exploitability.

Pretty impressive that it’s done in 1-4 hours with 10mins. I wonder if the human was monitoring the entire time or was just notified of the results to reject/accept results. How skilled was the human operator to know if the vulerability was real or hallunicated?

Or was the reviews vibed check and the human operator gave a LOOKS GOOD TO ME type of thing? Couldn’t claude test this themselves?

Phase 4: Credential harvesting and lateral movement

Lateral movement proceeded through AI-directed enumeration of accessible systems using stolen credentials. Claude systematically tested authentication against internal APIs, database systems, container registries, and logging infrastructure, building comprehensive maps of internal network architecture and access relationships.

I found claude to be amazing at analysis - Why is this so? How did they align the model so well?

Phase 5: Data collection and intelligence extraction

Again review from human

Phase 6: Documentation and handoff

Claude automatically generated comprehensive attack documentation throughout all campaign phases. Structured markdown files tracked discovered services, harvested credentials, extracted data, exploitation techniques, and complete attack progression. This documentation enabled seamless handoff between operators, facilitated campaign resumption after interruptions, and supported strategic decision-making about follow-on activities.

Why claude? Why not any other model (gpt-5? gemini? why not just open source models…) I’m just thinkning about why would this group pick the company that cares about safety the most?

The operational infrastructure relied overwhelmingly on open source penetration testing tools rather than custom malware development. Standard security utilities including network scanners, database exploitation frameworks, password crackers, and binary analysis suites comprised the core technical toolkit. These commodity tools were orchestrated through custom automation frameworks built around Model Context Protocol servers, enabling the framework’s AI agents to execute remote commands, coordinate multiple tools simultaneously, and maintain persistent operational state.

Nice, so the users were experts in their field, building their mcp connectors for these tool and having tested them before at least before actually using them for the attack

This raises an important question: if AI models can be misused for cyberattacks at this scale, why continue to develop and release them? The answer is that the very abilities that allow Claude to be used in these attacks also make it crucial for cyber defense. When sophisticated cyberattacks attacks inevitably occur, our goal is for Claude—into which we’ve built strong safeguards—to assist cybersecurity professionals to detect, disrupt, and prepare for future versions of the attack. Indeed, our Threat Intelligence team used Claude extensively in analyzing the enormous amounts of data generated during this very investigation.

Make obvious sense

Final thoughts

This post lacks any detail about the attack itself (I’d argue it isn’t a paper or a report that’s well suited for the security teams, more like an AI model building report that’s common these days). However, it describes how it’s done, almost like most people will use it, which is nice to see how experts are using claude and other tools to automate their workflows. It is quite interesting that the actors used claude for attack ~ Maybe they found the tool to be the most effective / well developed for doing such tasks? Have some learning for myself to automate tasks!

The community response

The security community takes no bullshit from what I know. So, an expert in the field posted this as a response: https://djnn.sh/posts/anthropic-s-paper-smells-like-bullshit/ and had a lot of feedback on hackernews: https://news.ycombinator.com/item?id=45944296

Let me read through this and see what an expert thinks and if I would agree (having been in the field a bit)

If you’re like me, you then eagerly read the rest of the paper, hoping to find clues and technical details on the TTPs (Tactics, Techniques and Procedures), or IoCs (Indicators of Compromise) to advance the research. However, the report very quickly falls flat, which sucks.

Wow, immediate attack on the paper/report.

This is typically done by sharing domain-names linked with the campaign, MD5 or SHA512 hashes you could look for on Virus Exchange websites such as VirusTotal, or other markers that would help you verify that your networks are safe. As an example, here is the French CERT sharing (in French, but an English version is available too) about APT28’s TTPs.

Very much true. If you look at any existing security vulnerability, it’s common in the field to publish what the attack did and detail it. Maybe an expert was not allowed to write in the format they wanted to or maybe it wasn’t an expert who wrote the report.

What kind of tooling is used ? What kind of information has been extracted ? Who is at risk ? How does a CERT identifies an AI agent in their networks ? None of these questions are answered. It’s not like Anthropic doesn’t have access to this data, since they claim they were able to stop it.

The author dug deeper than I did. Great to see and I should have done the same.

How ? Did it run Mimikatz ? Did it access Cloud environments ? We don’t even know what kind of systems were affected. There is no details, or fact-based evidence to support these claims or even help other people protect their networks.

The author goes on a rant. Nice to see passion :)

Look, is it very likely that Threat Actors are using these Agents with bad intentions, no one is disputing that. But this report does not meet the standard of publishing for serious companies. The same goes with research in other fields. You cannot just claim things and not back it up in any way, and we cannot as an industry accept that it’s OK for companies to release this. There seem to be a pattern for Tech Companies (especially in AI, but they’re not the only culprits) out there to just announce things, generate hype and then under-deliever. Just because it works with VCs doesn’t mean it should work with us. We should, as an industry, expect better.

True and false (feel free to disagree). I agree that this is the standard, BUT the company is not a security company. I would say they should have not sold it as a report/paper. Rather kept it as a blog if they don’t want to release details…

If they’re going to release IoCs and proof of everything, I’d be happy to share them here. But until them, I will say this: this paper would not pass any review board. It’s irresponsible at best to accuse other countries of serious things without backing it up. Yes, I am aware that Chinese-linked APTs are out there and very aggressive, and Yes, I am aware that Threat Actors misuse LLMs all the time, but that is besides the point. We need fact-based evidence. We need to be able to verify all this. Otherwise, anyone can say anything, on the premise that it’s probably happening. But that’s not good enough.

Like the passion. I disagree as it’s the open internet and they haven’t submitted for anyone to review (that I know of?). I DO agree that the internet should not accept bullshit (I don’t agree that the report is bullshit) and that it’s fine to express your opinions online.

Enjoy Reading This Article?

Here are some more articles you might like to read next: